Architectural Model Explorer

Virtual Reality Thesis

2019

#C# #Unity #VR #HTCVive #Research

This VR application was developed as part of my undergraduate research project in my final year studying Computer Science at Ryerson University. It explores how a small-scale 1:10 model of a building can be interacted with to help navigate a full-scale model of the same building in Virtual Reality.

It was developed in Unity with C# and SteamVR, and is playable with the HTC Vive on PC.

Scene Description

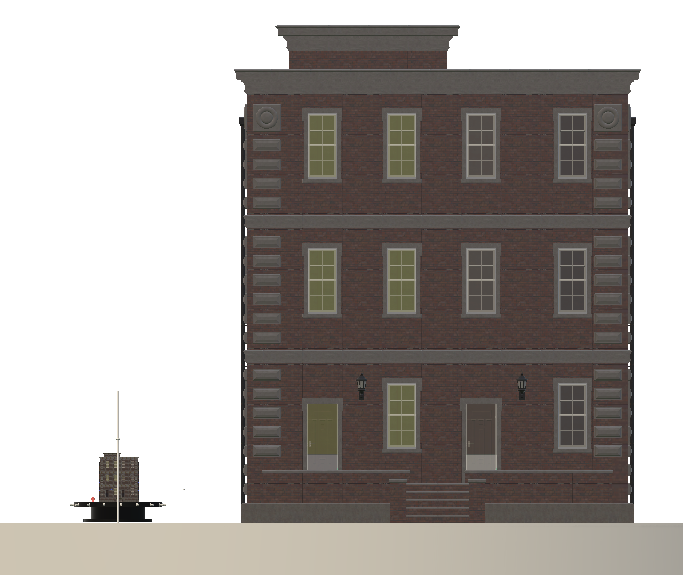

This program consists of a single scene, which includes a skybox. A 1:10 (small-scale) model and a 1:1 (full-scale) model of the Brick Building prefab sit atop a circular ground plane. The small-scale model starts next to the full-scale model, but can be moved wherever the player chooses. It is an exact copy of the full-scale model, with the same level of detail in the interiors and exteriors. Note that there is no furniture within the models. When the program begins, the user is virtually standing in front of the two models at a 1:1 human scale. The player’s scale never changes.

Orthographic back view of the scene.

Perspective view of the entire scene.

Orthographic top-down view of the scene with exposed floor plans.

Small-Scale Model Tool

The small-scale model exists as a navigational aid and companion for spatial awareness. It sits atop a black, round table. The table can be rotated, which is indicated by the white spoke handles all around it. There is also a cross-section cutting plane that can be moved up and down to slice the small-scale model horizontally. Any part of the model above the cutting plane is ghosted (made very translucent) so that the user can view the interiors. The cutting plane is represented by a translucent blue square, attached at a joint to a white pole apparatus. This apparatus indicates to the user that the cutting plane can move along the vertical axis. Within the small-scale model is a red, humanoid, small-scale avatar that moves dynamically to represent the location of the user in the full-scale model. The entire small-scale model, with the table and cutting plane, can be moved to whichever point on the ground/floor the user chooses. If the small-scale model is placed within the full-scale model, the full-scale model’s walls animate away to prevent the meshes of the two models from clipping into each other.
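As a rough illustration of how the small-scale avatar can track the user, here is a minimal sketch in Python. It is not the project's actual C#/Unity code. It assumes a y-up coordinate system, a single yaw angle for the turntable, and the 1:10 scale described above. The function name, parameter names, and the choice of origins are all hypothetical.

```python
import math

SCALE = 0.1  # 1:10 small-scale model, as described in the text


def full_to_miniature(p_full, building_origin, table_origin, table_yaw_deg):
    """Map a full-scale position (x, y, z) to its spot in the small-scale model.

    building_origin: reference point of the full-scale building (assumed).
    table_origin:    where that same reference point sits on the table (assumed).
    table_yaw_deg:   current turntable rotation about the vertical (y) axis.
    """
    # Offset of the user from the full-scale building's reference point,
    # shrunk to miniature size.
    dx = (p_full[0] - building_origin[0]) * SCALE
    dy = (p_full[1] - building_origin[1]) * SCALE
    dz = (p_full[2] - building_origin[2]) * SCALE

    # Rotate the offset about the vertical axis to follow the turntable.
    a = math.radians(table_yaw_deg)
    rx = dx * math.cos(a) + dz * math.sin(a)
    rz = -dx * math.sin(a) + dz * math.cos(a)

    # Place the rotated offset relative to the table.
    return (table_origin[0] + rx, table_origin[1] + dy, table_origin[2] + rz)
```

With the turntable at 0 degrees, a user standing 10 m from the building's reference point appears 1 m from the corresponding point on the table. Rotating the turntable swings that point around the table's vertical axis.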

Perspective view of the small-scale model tool.

Orthographic side view of the small-scale model tool.

Navigation

The user navigates the full-scale space through teleportation, using either of two methods. The first is local teleportation: the user points at a spot on the floor or ground around them in the full-scale model and chooses to teleport there. The second is small-scale model teleportation: the user points at a spot on one of the floors in the small-scale model and teleports to the corresponding spot in the full-scale model. The valid navigational surfaces are the ground plane, all three floors of the building, and the staircases within the building. A ghosted avatar represents the user’s teleportation point, and it can be pre-oriented before teleporting to help the user stay oriented. If the user, for example, teleports to a corner and ends up facing a wall, they would have to physically turn around to re-orient themselves; pre-orienting the avatar avoids this.
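Small-scale model teleportation amounts to converting a point picked on the miniature back into full-scale coordinates, along with the facing direction chosen for the ghosted avatar. The sketch below is a Python illustration under assumed conventions (y-up, a single turntable yaw angle, 1:10 scale). It is not the project's actual code, and every name in it is hypothetical.

```python
import math

SCALE = 0.1  # 1:10 small-scale model, as described in the text


def miniature_to_full(p_mini, table_origin, table_yaw_deg, building_origin):
    """Convert a point picked on the small-scale model to a full-scale target."""
    # Offset of the picked point from the miniature's reference point.
    ox = p_mini[0] - table_origin[0]
    oy = p_mini[1] - table_origin[1]
    oz = p_mini[2] - table_origin[2]

    # Undo the turntable rotation about the vertical axis.
    a = math.radians(table_yaw_deg)
    ux = ox * math.cos(a) - oz * math.sin(a)
    uz = ox * math.sin(a) + oz * math.cos(a)

    # Scale the offset back up to full size and place it in the building.
    return (
        building_origin[0] + ux / SCALE,
        building_origin[1] + oy / SCALE,
        building_origin[2] + uz / SCALE,
    )


def full_scale_facing(avatar_yaw_deg, table_yaw_deg):
    """Facing direction after teleporting, given the pre-oriented avatar's yaw.

    The avatar's yaw is measured in the room, so the turntable's rotation
    is removed to get the heading relative to the full-scale building.
    """
    return (avatar_yaw_deg - table_yaw_deg) % 360.0
```

Local teleportation needs no such conversion, since the pointed-at spot is already in full-scale coordinates.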

Interaction

The user only requires one Vive controller and two buttons: the trackpad and the trigger. All interaction follows the same method, a variant of the HOMER technique, with selection achieved via ray casting. A straight line is cast from the end of the controller; if it intersects something that can be interacted with, the line changes colour and the object is highlighted in that same colour, which depends on what the object is (red for a point on the ground/floor, orange for the cutting plane, purple for the small-scale model table). A ghosted hand appears at the targeted object to indicate that it can be grabbed. This essentially “selects” the object. Pressing the trigger “grabs” the object remotely, and a curved, tethered line appears to indicate a connection between the controller and the hand at the grab point. An object can only be manipulated while it is grabbed; once grabbed, it can be manipulated using either of two methods: directional buttons that appear on the virtual controller’s trackpad, or hand motion. The directional buttons allow for a more precise, discrete, and unconstrained level of control, while hand motion allows for a quicker, more natural, and intuitive level of control with pseudo-physics.
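The selection step can be sketched as a nearest-hit ray cast followed by a colour lookup. The Python below is only an illustration. It approximates each interactable with a bounding sphere, which is a simplifying assumption and not how the project detects hits. The function names and the `targets` format are hypothetical, while the colour mapping comes from the description above.

```python
import math

# Highlight colours per object type, as listed in the text.
HIGHLIGHT = {"floor": "red", "cutting_plane": "orange", "table": "purple"}


def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a unit-length ray to a sphere, or None if it misses."""
    oc = [origin[i] - center[i] for i in range(3)]
    b = sum(oc[i] * direction[i] for i in range(3))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray's line never touches the sphere
    t = -b - math.sqrt(disc)
    if t < 0:
        t = -b + math.sqrt(disc)  # ray starts inside the sphere
    return t if t >= 0 else None


def select(origin, direction, targets):
    """Return (kind, colour) of the nearest target hit by the ray, or None.

    targets: iterable of (kind, center, radius) tuples (hypothetical format).
    """
    length = math.sqrt(sum(d * d for d in direction))
    unit = [d / length for d in direction]
    best = None
    for kind, center, radius in targets:
        t = ray_sphere_distance(origin, unit, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, kind)
    if best is None:
        return None
    return best[1], HIGHLIGHT[best[1]]
```

Returning only the nearest hit means an object in front of another takes priority. That matches the idea of a single ray selecting one object at a time.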

1) Small-Scale Model Turntable Rotation

Pointing at the turntable to select it.

Rotating the small-scale model using hand motion.

Rotating the small-scale model using directional buttons.

2) Small-Scale Model Cutting Plane Translation

Pointing at the cutting plane apparatus to select it.

Translating the cutting plane using hand motion.

Translating the cutting plane using directional buttons.
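The two ways of translating the cutting plane can be sketched as a discrete step per button press versus a continuous offset from hand motion, both clamped to the model's height range. This Python sketch is illustrative only. The step size, ghost opacity, and all names are assumed values rather than the project's actual settings, and the pseudo-physics of hand motion is not modelled.

```python
STEP = 0.05         # assumed distance moved per button press, in metres
GHOST_ALPHA = 0.15  # assumed opacity for "ghosted" (very translucent) parts


def clamp(value, low, high):
    """Keep value within [low, high]."""
    return max(low, min(high, value))


def step_plane(height, direction, min_h, max_h, step=STEP):
    """Directional buttons: move one fixed step up (+1) or down (-1)."""
    return clamp(height + direction * step, min_h, max_h)


def drag_plane(height, hand_delta_y, min_h, max_h):
    """Hand motion: follow the controller's vertical movement directly."""
    return clamp(height + hand_delta_y, min_h, max_h)


def part_alpha(part_bottom_y, plane_height):
    """Parts of the model above the cutting plane are ghosted."""
    return GHOST_ALPHA if part_bottom_y >= plane_height else 1.0
```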

3a) Teleportation Using Small-Scale Avatar

Pointing at a spot on the floor to select as the point to teleport to in the full-scale model.

Changing avatar orientation using hand motion, then teleporting.

Changing avatar orientation using directional buttons, then teleporting.

3b) Teleportation Using Full-Scale Avatar

Pointing at a spot on the floor to select as the point to teleport to in the full-scale model.

Changing avatar orientation using directional buttons, then teleporting.

4) Small-Scale Model Table Relocation

Relocating the small-scale model table to the spot in front of the user.

Relocating the small-scale model table to several different spots on the floor.

User Study

The effectiveness of the program’s design was tested through a user study, and each participant was tested in the same way. They were briefed with some information before starting, then the headset was placed on their head and the test began.

They were given enough information to get started, but details were purposely left out. I wanted to observe which interaction methods they learned on their own, and which they preferred. Therefore, they were given contextual information about the environment and controls, and told that they could move around in multiple ways and interact by pointing and grabbing. They were left to bridge the gap between what they wanted to do and how to do it.

Their task was to physically reach out and touch a manually placed orb floating in space. How they got to it was their choice, and this choice is what was observed. Each time they touched the orb, it moved to a new, preset location, and they had to find and touch it again, 10 times in total. The only hint of the orb’s location was a green arrow floating above the controller and pointing toward the orb. If the participant had not figured out all interaction/navigation methods by the 5th orb, they were told everything through a quick, verbal tutorial.

Doing this enabled the analysis of self-learning, while also showing how participants chose to operate once they knew everything they could do within the program. For example, the 2nd orb was on the first floor of the building while the 3rd orb was on the third floor, directly above it. It was interesting to see whether they chose to teleport along the floor and up the stairs to the third floor, or teleported there instantly using the small-scale model.

Before touching the first orb, notice how the green arrow is pointing at it.

Just after touching the first orb.

Here is the Abstract from my research paper:

'Designing an Architectural Model Explorer for Intuitive Interaction and Navigation in Virtual Reality'

Abstract

The availability of modern virtual reality (VR) technology provides increasingly immersive ways for the average person to observe and view building models. In this paper, prior research of multi-scale virtual environments, interaction and navigation methods, and existing commercial software were combined and extended to design a simple and intuitive architectural model explorer in VR. Specifically, this paper aimed at understanding how such a program should interface with a naïve user, that is, someone inexperienced with VR and architecture. A user study was conducted with 10 participants to observe the holistic effectiveness of the design decisions of the created program, such as the navigational benefits of the moveable small-scale model and its WIM-like interface.

The program was developed in Unity for the HTC Vive VR headset and was designed to include a full-scale model of a building and a small-scale 1:10 version of the same building within the same virtual space. The small-scale model can be moved wherever the user chooses or hidden completely. It can also be rotated and sectioned-off using a cutting plane. The user can teleport within the full-scale model by selecting a point on the ground around them or by choosing a point in the small-scale model. All interaction methods, including navigation, are initiated using a remote point and grab technique, combined with visual cues like colour changes, highlights, and affordances. Once something is grabbed, it can be manipulated using either hand motion or directional buttons on the controller’s trackpad. With multiple options for teleportation and manipulation techniques, each user was given a navigational task and recorded and surveyed to understand their preferences and/or frustrations.

The results of the user study showed that the design of the program was mostly a success and that the small-scale building model used is a great multi-purpose tool for navigational aid. Being able to teleport both locally and using the small-scale model is advantageous. Pointing and grabbing for selection is useful for self-discovery and quick learning, and indication through magic/affordance is especially important. Inexperienced VR users instinctively used motion for movement and manipulation. It was therefore good enough for general usage, especially since users did not care to use the directional buttons for more precision.